Paint your Zoom Background: Portrait Segmentation for Video Stylization

- Neural Style Transfer

- Motivation

- Approach

- Implementation

- Results

- Future Work

- Presentation

- Code

- Footnotes

This page serves as the online companion to Yiwei Zhang’s final project for the course CS 639: Computer Vision in Fall 2020.

Neural Style Transfer

The term image style transfer, or image stylization, refers to the image processing task of extracting the style features of one set of images and applying that style to another set of images. Although style transfer performs well when the images to be stylized are landscape photographs, it sometimes runs into limitations when the photographs contain other elements, such as a portrait. Motivated by this issue, this project develops an image style transfer method for photographs that contain both a portrait and a landscape. Applying this method frame by frame to Zoom videos yields video style transfer.

Style

Content

Result

Motivation

Most of us are taking courses online this semester. Some of the courses are held on Zoom and involve video chat. Zoom offers two virtual background modes.

Current Zoom Background

a

The first mode assumes you have a flat, solid-color background. It replaces the dominant color of your background with a static picture. This mode fails when the background is cluttered.

b

The second mode segments the portrait from the background. It detects the person and replaces everything else with a static picture. The problem with this mode is that it discards the detail of the real background and is not fun or dynamic.

Goal

The goal of this project is to propose and implement a video style transfer method that stylizes the video background while keeping the portrait intact.

Approach

- Dump frames and audio file from the video file.

- Generate person segmentation masks.

- Apply neural style transfer on dumped frames.

- Combine masks and stylized frames, mask out person or background.

- Create result videos.
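
The first and last steps above could be sketched as follows. This is only an illustration: the page does not say which tool was used to dump frames or rebuild the videos, so the use of the `ffmpeg` command-line tool, the helper names `dump_commands` and `assemble_command`, the file layout, and the default frame rate are all assumptions.

```python
from pathlib import Path


def dump_commands(video, workdir):
    """Build ffmpeg commands that split a video into PNG frames and a WAV audio track.

    Assumption: ffmpeg is installed and workdir/frames already exists.
    """
    workdir = Path(workdir)
    frames = workdir / "frames" / "%05d.png"  # numbered frames: 00001.png, 00002.png, ...
    audio = workdir / "audio.wav"
    return [
        ["ffmpeg", "-i", str(video), str(frames)],       # one image per frame
        ["ffmpeg", "-i", str(video), "-vn", str(audio)],  # -vn drops the video stream
    ]


def assemble_command(frames_pattern, audio, output, fps=30):
    """Build an ffmpeg command that joins processed frames and audio into a video.

    Assumption: fps should match the frame rate of the source video.
    """
    return [
        "ffmpeg",
        "-framerate", str(fps),
        "-i", str(frames_pattern),
        "-i", str(audio),
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",
        "-c:a", "aac",
        "-shortest",  # stop at the end of the shorter input
        str(output),
    ]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)`. Building the commands as lists, rather than as one shell string, avoids quoting problems with file names that contain spaces.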

Implementation

Pipeline

First, a person segmentation mask is obtained for each frame using a state-of-the-art segmentation model in PyTorch. Second, the whole frame is stylized using the style transfer model. Finally, the mask is combined with the stylized frame to produce the final result.

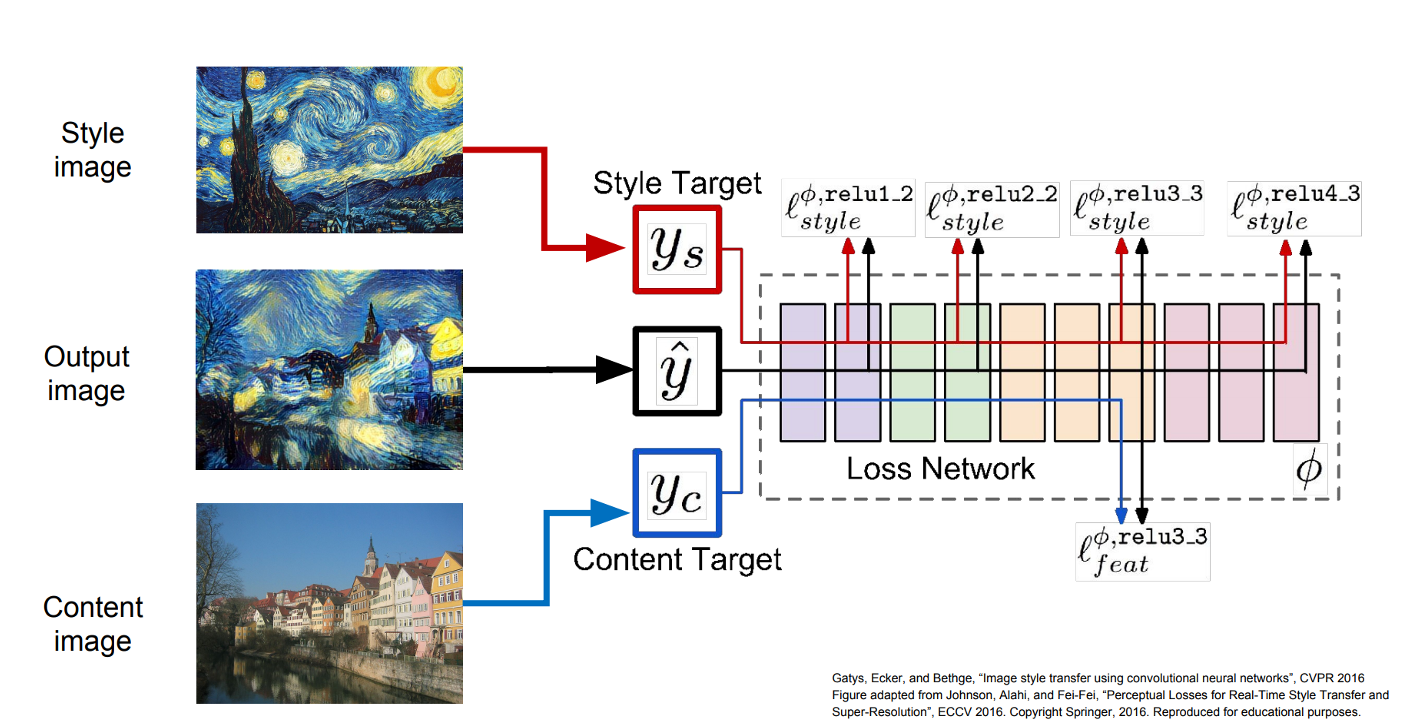
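
The final combination step of the pipeline can be illustrated with a small NumPy sketch. It is not the project's actual code: the function names are hypothetical, the segmentation output is assumed to be an array of per-class scores with shape `(C, H, W)`, and the person class index 15 is an assumption that follows the Pascal VOC label order.

```python
import numpy as np

PERSON_CLASS = 15  # assumption: Pascal VOC label order, where index 15 is "person"


def person_mask_from_logits(logits, person_class=PERSON_CLASS):
    """Turn per-class scores of shape (C, H, W) into a boolean (H, W) person mask."""
    return np.argmax(logits, axis=0) == person_class


def composite(original, stylized, person_mask, stylize_person=False):
    """Pick, per pixel, the original or the stylized frame according to the mask.

    original, stylized: uint8 arrays of shape (H, W, 3)
    person_mask: boolean array of shape (H, W), True on the person
    stylize_person: False stylizes the background, True stylizes the person
    """
    if original.shape != stylized.shape:
        raise ValueError("frames must have the same shape")
    use_stylized = person_mask if stylize_person else ~person_mask
    # Add a trailing axis so the (H, W) mask broadcasts over the colour channels.
    return np.where(use_stylized[..., None], stylized, original)
```

With `stylize_person=False` the person stays as filmed and only the background is painted; flipping the flag gives the opposite effect from the same mask and the same stylized frame.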

Neural Style Transfer Model

Results

Original Video

Fully Transferred Video

Partially Transferred Video

Combined Result Video

Future Work

- Apply image blending near the boundary.

- Increase inference speed and decrease computation cost for real-time deployment.
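
One possible way to approach the boundary blending item is to soften the binary mask and use it as a per-pixel blending weight. The sketch below is only an assumption about how this might be done, not part of the project: the box blur, the `radius` parameter, and the function names `feather_mask` and `blend` are all illustrative choices.

```python
import numpy as np


def feather_mask(mask, radius=2):
    """Soften a binary (H, W) mask with a box blur; returns floats in [0, 1]."""
    soft = mask.astype(np.float64)
    if radius <= 0:
        return soft
    k = 2 * radius + 1  # blur window is k x k pixels
    padded = np.pad(soft, radius, mode="edge")
    h, w = soft.shape
    out = np.zeros_like(soft)
    # Sum the k*k shifted copies of the mask, then divide to get the window mean.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def blend(original, stylized, soft_mask):
    """Mix two uint8 (H, W, 3) frames; soft_mask is the weight of the original."""
    alpha = soft_mask[..., None]  # broadcast over the colour channels
    mixed = alpha * original + (1.0 - alpha) * stylized
    return np.clip(np.rint(mixed), 0, 255).astype(np.uint8)
```

Far from the boundary the softened mask is still exactly 0 or 1, so the result matches a hard composite there; only a band of roughly `radius` pixels on each side of the edge is mixed.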

Presentation

Code

Footnotes

- Johnson, J., Alahi, A., & Fei-Fei, L. (2016). Perceptual Losses for Real-Time Style Transfer and Super-Resolution. ECCV.

- Chen, L., Papandreou, G., Schroff, F., & Adam, H. (2017). Rethinking Atrous Convolution for Semantic Image Segmentation. ArXiv, abs/1706.05587.

- Chen, L., Zhu, Y., Papandreou, G., Schroff, F., & Adam, H. (2018). Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. ECCV.

- Shen, X., Hertzmann, A., Jia, J., Paris, S., Price, B.L., Shechtman, E., & Sachs, I. (2016). Automatic Portrait Segmentation for Image Stylization. Computer Graphics Forum, 35.

- Gatys, L.A., Ecker, A.S., & Bethge, M. (2016). Image Style Transfer Using Convolutional Neural Networks. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2414-2423.